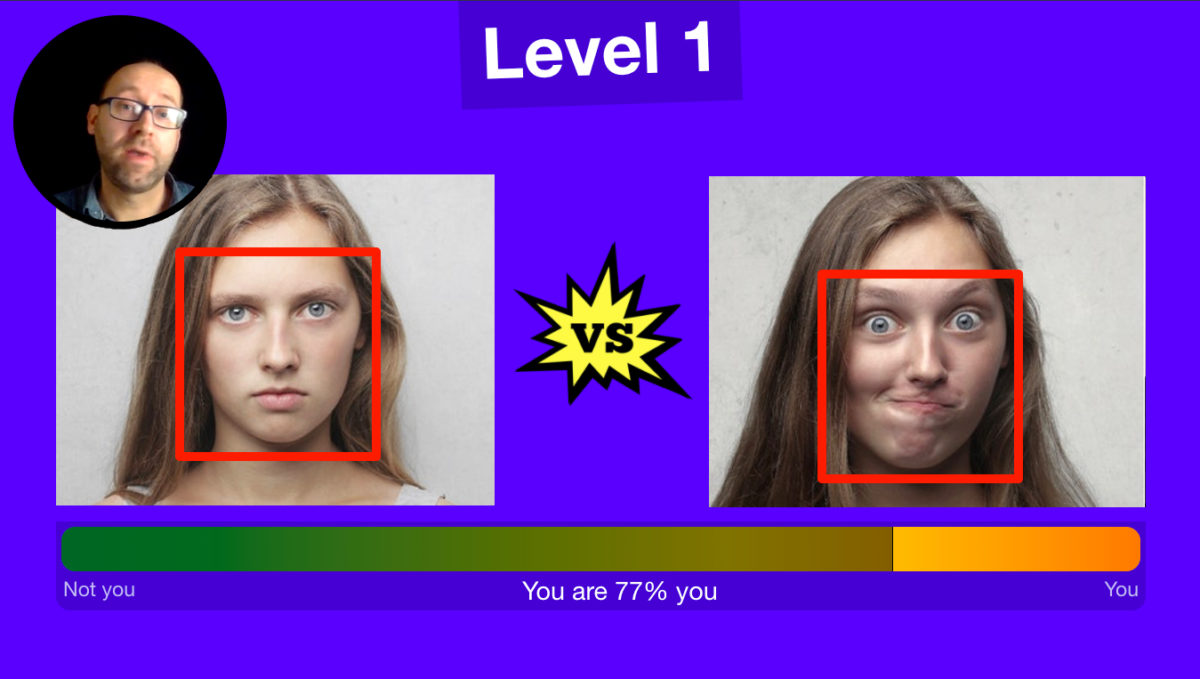

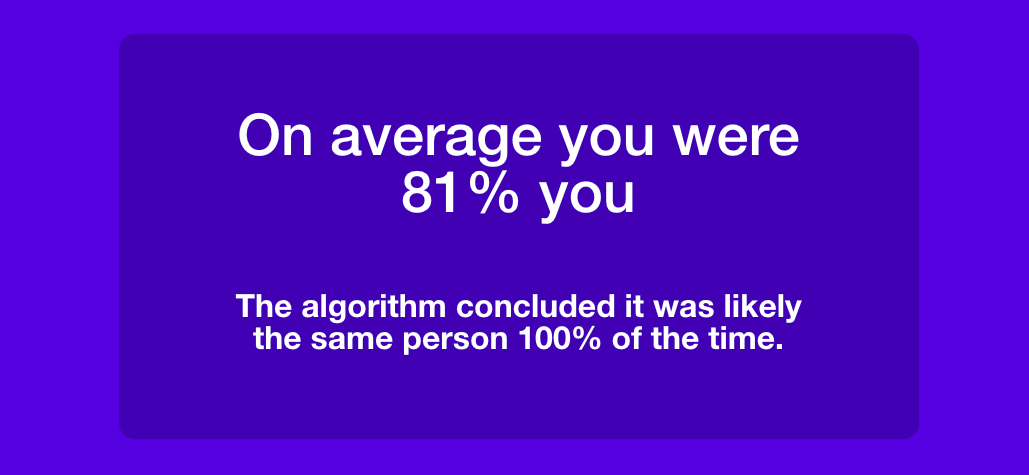

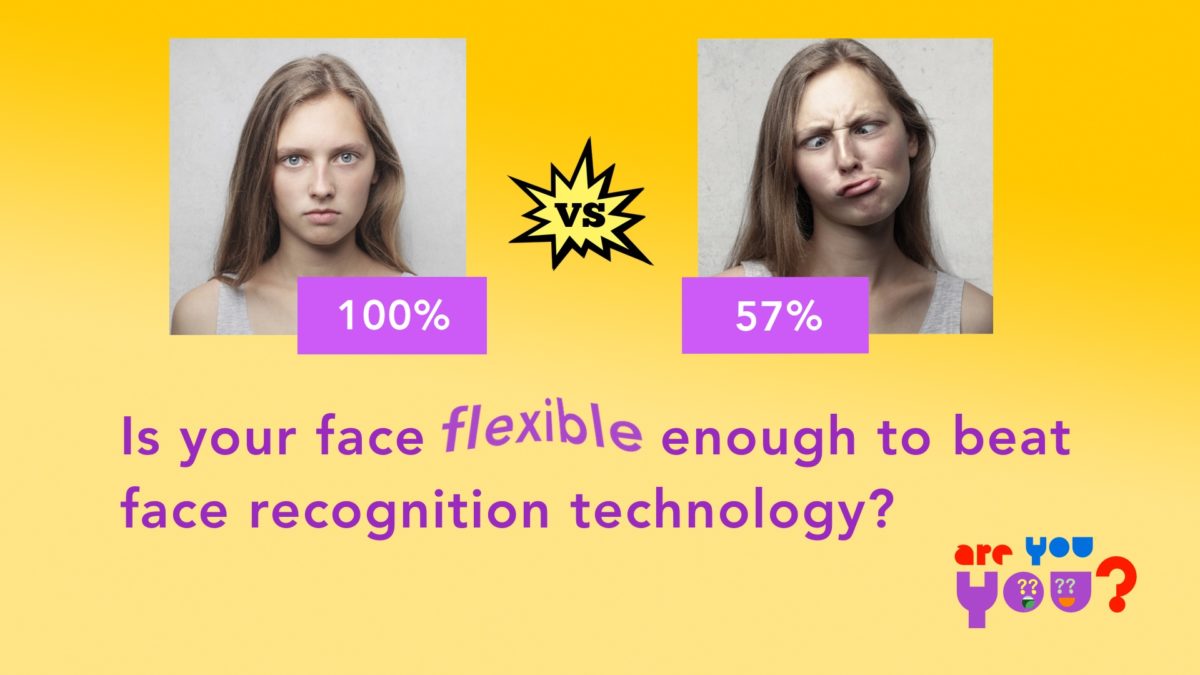

In this game the goal is to fool a face recognition algorithm by making funny faces. At the start of the game a picture of your face is taken, and for the rest of the game you try to make yourself not look like yourself by contorting your face. If you can get the match percentage below 50%, then you win.

It’s quite difficult to do, but it’s not impossible. The first main point of the project, however, is to show that these systems are by their nature dealing in likelihoods, not absolute certainty. They output a percentage that indicates how well two faces match. So when we talk about a “perfect match”, it might refer to any match above 70%.
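To make the idea of a match percentage concrete, here is a minimal sketch of how such a score could be derived. It assumes each face is represented as a numeric descriptor vector and that similarity is computed from the Euclidean distance between two descriptors. The function names, the toy 4-number descriptors, and the linear distance-to-percentage mapping are all assumptions for illustration, not the formula this project actually uses.

```typescript
// Illustrative sketch only: helper names and the linear mapping are assumptions,
// not the project's actual scoring formula.

/** Euclidean distance between two face descriptors of equal length. */
function euclideanDistance(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Descriptors must have the same length");
  }
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i];
    sum += diff * diff;
  }
  return Math.sqrt(sum);
}

/**
 * Map a distance to a 0-100 score (assumed linear mapping):
 * distance 0 gives 100, any distance of 1 or more gives 0.
 */
function matchPercentage(reference: number[], current: number[]): number {
  const distance = euclideanDistance(reference, current);
  const similarity = Math.max(0, 1 - distance);
  return Math.round(similarity * 100);
}

/** The player wins when the score drops below the threshold (50% in this game). */
function playerWins(percentage: number, threshold: number = 50): boolean {
  return percentage < threshold;
}

// Toy descriptors; real descriptors are much longer vectors.
const referenceFace = [0.1, 0.2, 0.3, 0.4]; // picture taken at the start
const neutralFace = [0.1, 0.2, 0.3, 0.5];   // almost the same face
const funnyFace = [0.5, -0.2, 0.6, 0.0];    // strongly contorted face

console.log(playerWins(matchPercentage(referenceFace, neutralFace)));
console.log(playerWins(matchPercentage(referenceFace, funnyFace)));
```

The point of the sketch is that the system never answers “yes” or “no” by itself. Someone has to pick the threshold, and moving it from 50 to 70 changes who counts as a match.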

Issues around face recognition tech

The first issue with face recognition technology is that a lot of institutions (e.g. the police) blindly trust the accuracy of these systems. This has already led to false arrests (Youtube link) in which the supposed culprit had a very tough time convincing the police officers that they had made a mistake.

A bigger issue the project tries to highlight is that even if these systems worked flawlessly, they would still be problematic. This is because the very existence of these systems can create unwanted* chilling effects in society. (*Unwanted in Europe and the USA anyway).

This could be detrimental to our democracies’ ability to evolve. If people feel they can easily be recognised wherever they go, they may be less inclined to join a protest. For example, the use of face recognition systems during the Hong Kong and Black Lives Matter protests may deter people from expressing themselves or showing solidarity if they fear it might lead to unwanted repercussions at some later date.

But even in daily life these chilling effects may arise. For example, people may feel watched and controlled when they travel through cities, and may avoid certain areas because it “might look bad” if they visit those areas, and it might be a datapoint that leads to them missing out on a future job interview. In other words, these systems can create stress in law-abiding citizens, who might feel they have to be “on guard” more often. This is quite ironic, since security cameras are supposed to alleviate stress, not increase it.

Privacy

Are You You is a follow-up to How Normal Am I, and uses the same techniques to create a privacy-protecting experience. For example, the machine learning model (AI) is run locally in the browser, so that it’s not necessary to upload images to a cloud server. The model once again comes from the ‘open source’ FaceApi.js project. How the model was trained, I do not know.

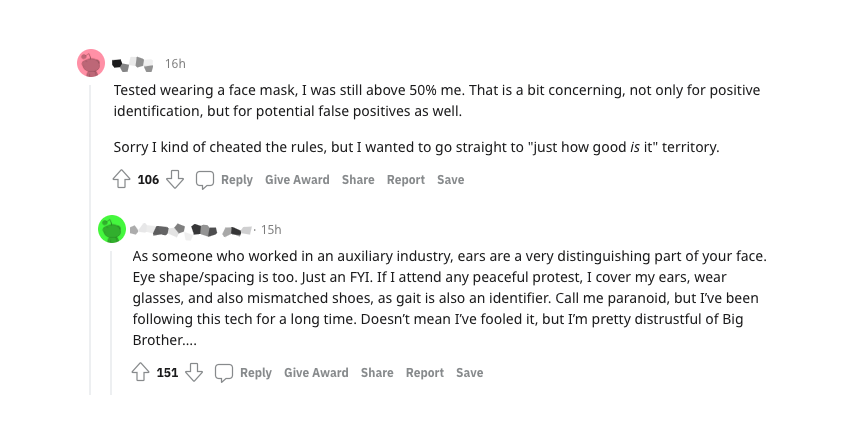

The project does store the game’s final (anonymous) score, and whether the algorithm was at any point beaten. This is because I personally have a very hard time beating the game (which is done by getting the match percentage below 50%), but for some people this is easier. I found that about 1 in 4 sessions managed to beat the game, which is more than I expected. Still, this only says something about this particular algorithm, which is already a low-power algorithm since it has to be small so it can be downloaded quickly. Commercial systems, such as those of Clearview AI, are likely harder to fool.

In the media

The project was launched in September 2021. A fun discussion of the project occurred on Reddit, where understandably the first question was “is this really privacy friendly?”. Other people used it as a tool to test how well the technology works.

Origins

The project came about when Bits of Freedom asked me to advise EDRI, a Brussels-based organisation that aims to protect digital human rights, on a campaign they were running called Reclaim Your Face. They wanted to create face masks that would protect people from face recognition. One contribution I made was to point out that such a mask could create a false sense of security among activists, as reliably fooling face recognition is very difficult to do. A better narrative might be that current face recognition is becoming inescapable, and that we need laws that limit the deployment of large-scale urban biometric recognition systems. EDRI should therefore point out the importance of lobbying for such laws.

Here I found an overlap with my work for SHERPA, an EU research consortium that explores how AI systems can better protect human rights. I proposed to make a SHERPA piece that supports the Reclaim Your Face campaign.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme, under grant agreement No 786641.